Chapter Six: Linear Model Selection and Regularization

Conceptual Problems

Problem One

Part a)

The smallest training residual sum of squares (RSS) will occur for the "best subset selection" approach. This is because the algorithm uses the training set RSS to determine which model is the best and it is an exhaustive model (unlike the other two approaches).

Part b)

It is not possible to definitively say which approach result in the model with the lowest test set RSS (lowest prediction error) a priori. However, "best subset selection" method has a large chance of overfitting the data resulting in a low training RSS but a larger test set RSS due to a high variance and low bias. For the other two method the only way to determine which approach may have produced the lowest test RSS indirect approaches such as cross-validation or some sort of information criterion needs to be employed.

Part c)

i. True. By definition the $k+1$ model uses the $k$ models predictors as a subset to build upon.

ii. False. As the $k+1$ step removes a predictor, the $k$ model predictors cannot be a subset.

iii. False. This can be demonstrated by a simple toy model of three variables X_1, X_ and X_3. For $k=1$, backward stepwise selection may deem $X_3$ the least important and remove it. However, the $k=1$ step of forward stepwise selection may determine that $X_3$ singularly describes the data better than than the other two varaiables, and therefore the backward stepwise selection will not always be a subset of forward stepwise selection of the k=1$ step.

iv. False. This follows similar reasons as statement iii.

v. False. This assumes that "forward subset selection" will always select the best predictors. However, the "best subset selection" may not even contain the first selected variable of FSS.

Problem Two

Part a) Lasso Regularization

The true statement is:

Less flexible and hence will give improved prediction accuracy when its increase in bias is less than its decrease in variance.

Part b) Ridge Regularization

The true statement is:

Less flexible and hence will give improved prediction accuracy when its increase in bias is less than its decrease in variance.

Problem Three

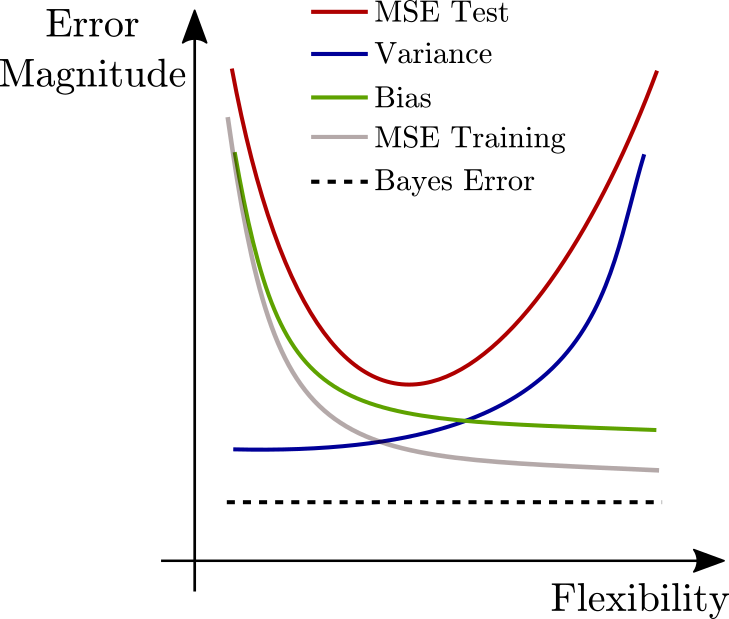

This problem is related to the bias variance tradeoff. Therefore, we include the standard image of how the errors relate to model flexibility below,

Part a) Training RSS

Decrease initially and level out.

As $s$ is inversely related to $\lambda$, lower values of $s$ correspond to a highly constrained (read inflexible) model. Therefore, as $s$ increases and there is more 'budget' for variance, the model becomes more flexible and model fits (and most likely will eventually overfit) the training data where the training error will level out.

Part b) Test RSS

Decrease initially, and then eventually start increasing in a 'U' shape.

This reflects the standard 'U' shape error due as a model flexibility increases (See chapter 2 for more details.)

Part c) Variance

Steadily increase

Part d) Bias

Steadily decrease

Part e) irredicle Error (Bayes Error)

Remains constant

Problem Four

This problem is very similar to problem four except the reverse. As $\lambda$ increases, the models become more constrained and less flexible with ridge regression ($l^2$ norm) rather than lasso. Therefore, without justification,

Part a) Training RSS

Increase Steadily

Part b) Test RSS

Decrease initially, and then eventually start increasing in a 'U' shape.

Part c) Variance

Steadily Decrease

Part d) Bias

Steadily increase

Part e) irredicle Error (Bayes Error)

Remains constant

Problem Five

For this problem the number of data points is $n=2$ and the number of predictors is $p=2$. It also has the constraints: $$ x_{11} = x_{12} \quad x_{22} = x_{21} \\y_1 + y_2 = 0 \\x_{11} + x_{21} = 0 \\x_{22} + x_{12} = 0 $$

Part a)

The ridge penalized RSS (with no intercept due to the constraints) is given by , $$ PRSS_{R} = \sum_{i=1}^{n}\left(y_i - \sum_{j=1}^{p}\left(\beta_{i}x_{ij}\right)\right)^2 + \lambda\sum_{j=1}^{p}\beta_{j}^2 $$ which, as with normal least squares, must be minimized with respect to $\boldsymbol{\beta}$. (Once again these are all estimators but the hats have been dropped).

Part b)

Differentiating the above equation w.r.t. $\boldsymbol{\beta}$ gives, $$ \dfrac{\partial PRSS_{R}}{\partial \beta_1} = 2(-x_{11})(y_1 - (\beta_{1}x_{11} + \beta_{2}x_{12})) + 2(-x_{21})(y_2 - (\beta_{1}x_{21} + \beta_{2}x_{22})) + 2\lambda \beta_1 \\\dfrac{\partial PRSS_{R}}{\partial \beta_2} = 2(-x_{12})(y_1 - (\beta_{1}x_{11} + \beta_{2}x_{12})) + 2(-x_{22})(y_2 - (\beta_{1}x_{21} + \beta_{2}x_{22})) + 2\lambda \beta_2 $$ Setting these to 0 and using the constaints of the problem gives, $$ -x_{11}y_1 + \beta_{1}x_{11}^2 + \beta_{2}x_{11}^2 - x_{22}y_2 + \beta_{1}x_{22}^2 + \beta_{2}x_{22}^2 + \lambda\beta_1 = 0 \\-x_{11}y_1 + \beta_{1}x_{11}^2 + \beta_{2}x_{11}^2 - x_{22}y_2 + \beta_{1}x_{22}^2 + \beta_{2}x_{22}^2 + \lambda\beta_2 = 0 $$ Subtracting the second equation from the first results in, $$ \lambda \beta_1 - \lambda \beta_2 = 0 \\\Rightarrow \beta_1 = \beta_2 $$ regardless of the choice of lambda.

Part c)

This is very similar to part a) however the regularizer is of a different form (norm). The penalized RSS for the lasso regularization is, $$ PRSS_{L} = \sum_{i=1}^{n}\left(y_i - \sum_{j=1}^{p}\left(\beta_{i}x_{ij}\right)\right) + \lambda\sum_{j=1}^{p}|\beta_{j}| $$

Part d)

Similarly there is the same cancellation of the terms when minimizing as in part b). The final equality is, $$ \lambda\left(\dfrac{\partial }{\partial \beta_1}|\beta_1| - \dfrac{\partial }{\partial \beta_2}|\beta_2|\right) = 0 $$

Problem Six

Toy model regularization with $n=p$ and $\textbf{X} = I(n)$ where $I(n)$ is the identity matrix of order $n$.

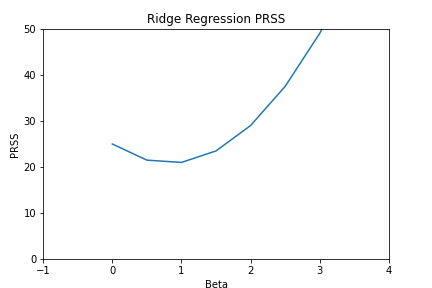

Part a)

Ridge regression in this toy model with $n=1$ is, $$ \begin{aligned} PRSS_{R} &= \left(y_1 - \beta_1\right)^2 + \lambda \beta_1^2 \\&= y_1^2 + \beta_1^2 - 2\beta_1y_1 + \lambda\beta_1^2 \\&= (\lambda + 1)\beta_1^2 -2\beta_1y_1 + y_1^2 \end{aligned} $$

This is just a quadratic equation with no roots. Mimimizing this w.r.t. $\beta_1$, $$ \beta_1 = \dfrac{y_1}{(\lambda + 1)}. $$